Jason Crawford asks, “What’s the crux between EA and progress studies?” This is the second in a series of posts on the question from a few different angles. See Part 1 here (link).

I have some apprehensions about writing this post, mostly because it would be easy to tell a great story of a grand philosophical clash of ideas: Progress advocates driving the gears of history forward on one side, and Effective Altruists desperately trying to grind them to a halt. But a story like that just wouldn’t be true to the actual disagreements at hand.

Telling the story of a grand conflict wouldn’t speak to the diversity of opinions in each community. It wouldn’t acknowledge the humility on both sides. It wouldn’t acknowledge that there’s been little engagement between EA and Progress to flesh out core disagreements. And it wouldn’t acknowledge the degree to which matters of mood and emphasis blend into the “truly” philosophical disagreements. So I want to write this post to acknowledge these realities, while trying to draw out some current points of disagreement.

So first, do they disagree at all?

Mike McCormick likes to quip that “Progress is EA for neoliberals.” And I think that there is a lot of truth to this! To a certain extent, Progress Studies and EA are different moods, one that feels libertarian and another that feels left-leaning [1]. This is especially evident when one considers that early EA efforts were highly focused on redistribution and that economic growth was essentially absent from the discussion. Per Cowen:

Not too long before he died, [Derek] Parfit gave a talk. I think it’s still on YouTube. I think it was at Oxford. It was on effective altruism. He spoke maybe for 90 minutes, and he never once mentioned economic growth, never talked about gains in emerging economies, never mentioned China.

I’m not sure he said anything in the talk that was wrong, but that omission strikes me as so badly wrong that the whole talk was misleading. It was all about redistribution, which I think has a role, but economic growth is much better when you can get it. So, not knowing enough about some of the social sciences and seeing the import of growth is where he was most wrong.

Based on the political demography of EA, we should expect there to be some persistence in this neglect of economic growth [2]. According to the 2019 EA Survey, 72% of respondents identified with the Left or Center-Left politically.

I’m not sure the Progress Community would look that different, but I do think Growth is clearly more salient for them.

These moods lead to differences in emphasis. Cowen, in his discussion with Robert Wiblin, mentions that earlier drafts of Stubborn Attachments had more discussion of existential risks, but he took them out because he thought growth had become the more underrated part of the discussion.

So the disagreement between Progress and EA might be more like Progress advocates reminding EAs about economic growth. Or in McCormick’s words, to be a bit more neoliberal. And EA might just be reminding them not to cause the apocalypse. Which one you choose might end up just being a matter of your mood affiliation.

Asking the big question

If this were 2014, I would argue that the above characterization is pretty fair (though Progress Studies would not have formally existed). But since then, the EA community has become increasingly focused on longtermism, a cluster of theories that emphasize the importance of wellbeing not just for sentient creatures that exist today but also for those that will exist in the future. Will MacAskill informally defines it as “the view that positively influencing the longterm future is a key moral priority of our time.”

This transition has increased EA interest in existential risk (XR, x-risk), catastrophic risk, and different future scenarios. It’s also led to a debate about whether we’re in a unique “time of perils,” where risks are unusually high. It’s this instantiation of EA that Progress has some natural tension with.

The problem with turning this into a full-fledged philosophical conflict is that there’s a lot of uncertainty about the relationship between existential risk and growth, even on the EA side of things. It’s just a new area of inquiry. My sense is that a lot of people assume someone has already worked out a good theory of it, but the work has only just begun.

In his post, Crawford asks, “Does XR consider tech progress default-good or default-bad?” This is sort of halfway between a mood question and an empirical question [3]; we have attitudes toward technological development that are only partially related to our predictions about technological development. I would even guess that some of the people I know who are most excited about technology also have higher subjective probabilities of tech-driven dystopian and catastrophic scenarios than people that don’t care for technology.

I think EAs and the Progress Community would agree that tech progress is good if progress in safety inevitably outpaces progress in destructive capabilities (though some EAs might still wish to halt tech progress entirely to focus on safety, depending on how quickly safety itself progresses). But a large part of the question is going to be answered by what is true about the nature of technological progress.

Again, because there’s a lot of uncertainty about the nature of technological progress on both sides, I don’t think we should overstate the existence of a big argument. If we knew it would soon bring the apocalypse, nobody would be in favor. If we knew it would prevent it, nobody would be in opposition. And few people have worked out indifference curves describing how they would trade off risk against growth, so even saying that Progress advocates are willing to take more risk for more growth compared to EAs seems a bit premature.

Also, I keep writing “the nature of technological progress” but I’m not even sure that’s a real thing. It could be highly path dependent. It could depend on cultures or individual contributors. There have been some general models proposed: the EA-oriented philosopher Nick Bostrom writes about technological progress as an urn out of which we are drawing balls (which represent ideas, discoveries, and innovations). White balls are good or innocuous; they’re most of what we’ve pulled out so far. But there are also grey ones, which can be moderately harmful or bring mixed blessings. Nuclear bombs were a grey ball, maybe CRISPR too. But Bostrom hypothesizes that there are also black balls, technologies which inevitably destroy civilization when they’re discovered.

Bostrom could be right. But there also could be safety balls that make the world much safer (destroying black balls, so to speak)! Maybe it’s easy to build spaceships fit to colonize the galaxy. Maybe it’s easy to create Dune-style shields. Maybe there’s something that ends the possibility of nuclear war. mRNA vaccine technology is clearly something like a safety ball, as it lowers pandemic risk.

Clearly this is an area that urgently needs further research, but it isn’t even clear what expectations for the research should be. Will we gain better insight into safety balls and their relative frequency to black balls? Will we figure out if and how their relative frequencies change over time? It doesn’t seem like the sort of thing we’ll be able to figure out, but it’s really the core of the question [4].
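To see why the relative frequency of safety balls to black balls is the crux, Bostrom's urn can be turned into a toy simulation. The rules below are assumptions made up for illustration, not Bostrom's model: the ball probabilities are invented, and "each safety ball neutralizes one later black ball" is a hypothetical stand-in for whatever safety technologies actually do.

```python
import random


def simulate_urn(p_black: float, p_safety: float, draws: int,
                 trials: int = 1_000, seed: int = 0) -> float:
    """Monte Carlo estimate of P(surviving `draws` draws) from a toy urn.

    Hypothetical rules, not Bostrom's: each draw is black with probability
    p_black, a safety ball with probability p_safety, and otherwise white or
    grey (treated as harmless). Each stored safety ball neutralizes one later
    black ball; an unneutralized black ball ends civilization.
    """
    if p_black < 0 or p_safety < 0 or p_black + p_safety > 1:
        raise ValueError("probabilities must be non-negative and sum to at most 1")
    rng = random.Random(seed)  # seeded so runs are reproducible
    survivors = 0
    for _ in range(trials):
        shields = 0  # safety balls drawn so far and not yet used up
        alive = True
        for _ in range(draws):
            u = rng.random()
            if u < p_black:
                if shields > 0:
                    shields -= 1  # a stored safety ball absorbs this black ball
                else:
                    alive = False
                    break
            elif u < p_black + p_safety:
                shields += 1
        if alive:
            survivors += 1
    return survivors / trials


# Same hypothetical black-ball rate, different safety-to-black ratios.
for ratio in (0.0, 0.5, 1.0, 2.0):
    p = simulate_urn(p_black=0.001, p_safety=0.001 * ratio, draws=1000)
    print(f"safety:black = {ratio:.1f} -> P(survive 1000 draws) ~ {p:.2f}")
```

With no safety balls, survival is just the chance of never drawing black; as the ratio rises, survival climbs. The point of the sketch is only that the answer hinges on a ratio nobody currently knows.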

So while I view the questions here as incredibly important, I don’t see them as points of disagreement. The actual points of disagreement right now are more on the margin: would we be better off with technological progress moving a bit more slowly or a bit more quickly? How much should the Precautionary Principle guide us?

Timelines

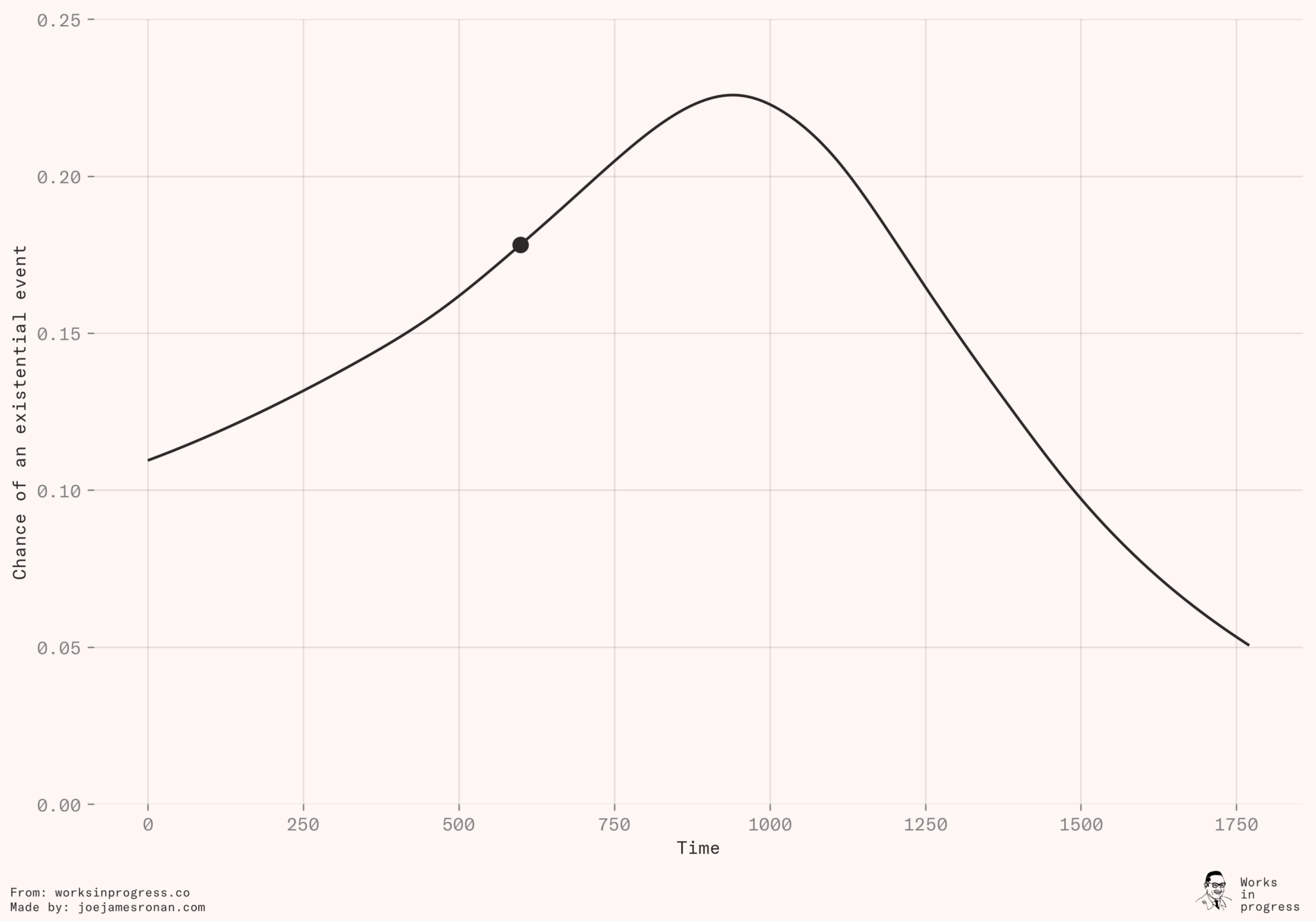

Perhaps the most prominent contribution to the growth vs x-risk question was made by Leopold Aschenbrenner. He argues that technological progress (really, economic growth) is by default good, because it propels us through our current time of perils into a safer world.

But even if Aschenbrenner’s model is correct, that we need to grow through our current time of perils to bring about a safer world, it makes for a poor argument against EA. If some talent and capital is willing to start overinvesting in safety early, they should obviously do so. That’s EA. To really argue against EA, Progress advocates would need to argue that EA is overrating x-risk. That would be a hard argument to make! It seems pretty clear that the marginal person who is willing to work on either safety or growth should be working on safety. That’s essentially the EA Longtermist position.

As Tyler Cowen said in a speech he gave at Stanford, “The longer a time horizon you have in mind, the more you should be concerned with sustainability” (in the EA sense of lower x-risk). That is to say, if humanity might be able to survive and flourish until the heat death of the Universe (or even a few million years), we should be obsessed with preventing extinction. If that’s the case, we have time for many more industrial revolutions and ten-thousand-year growth cycles; if there’s a (non-extinction) civilizational collapse, maybe next time will go better. We just need to make sure we don’t mess up so badly that we go completely extinct, and that we keep enough resources around with which to reindustrialize. Even a chance at creating that future is so important that growth in the next few hundred years is meaningless.

Just as growth loses its importance on such a long time horizon, it also loses its importance on a short horizon: if the world is ending next year, who cares about compounding returns?

Ironically, as Cowen argues, Progress as a movement to raise the growth rate makes the most sense if you think we are doomed to extinction, but still have a few hundred years of runway left. It’s enough time that the compound returns of growth really matter for human flourishing, and we don’t have to worry that much about x-risk because there was never a chance at the galactic civilization anyways. For example, you might think there’s a 0.1% chance of human extinction in any given year given all the x-risks. That would imply there’s about a 90% chance of surviving the next 100 years, and about a 37% chance of surviving the next 1000 years. The estimate Cowen gives in the Stanford speech is a life expectancy of 600-700 years.
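The survival figures in this example can be checked in a few lines, under the simplifying assumption (the example's, not an empirical estimate) that the same independent 0.1% risk applies every year.

```python
import math


def survival_probability(annual_risk: float, years: int) -> float:
    """P(no extinction over `years`), assuming the same independent risk every year."""
    return (1.0 - annual_risk) ** years


def median_survival_years(annual_risk: float) -> float:
    """Years until the cumulative survival probability falls to 50%."""
    return math.log(0.5) / math.log(1.0 - annual_risk)


risk = 0.001  # the hypothetical 0.1% annual extinction risk from the text
for horizon in (100, 1000):
    print(f"P(survive {horizon} years) = {survival_probability(risk, horizon):.3f}")
# P(survive 100 years) = 0.905
# P(survive 1000 years) = 0.368
print(f"median survival = {median_survival_years(risk):.0f} years")  # 693
print(f"mean waiting time = {1 / risk:.0f} years")  # 1000
```

Under this assumption the median survival time is about 693 years, which happens to land in the 600-700 year range Cowen gives; nothing here implies that is how he arrived at his estimate.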

Considering “small” risks

There are two potential responses from Progress. The first is a model like Aschenbrenner’s, which suggests that after a certain amount of compounding growth, x-risk becomes infinitesimal. In this case, Progress advocates are sort of “right by chance” that our best path forward is simply to raise the rate of growth.
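The first response can be stated a little more precisely with a standard fact about infinite products, offered here as a gloss rather than as Aschenbrenner’s actual model. Let h_t be the extinction risk in year t and S_T the probability of surviving through year T. Long-run survival has positive probability exactly when the total risk summed over all future years is finite, so a constant risk dooms us eventually, while a risk that shrinks geometrically (one hypothetical way growth might carry us through the time of perils) does not.

```latex
S_T = \prod_{t=1}^{T} (1 - h_t), \qquad
\lim_{T \to \infty} S_T > 0 \iff \sum_{t=1}^{\infty} h_t < \infty \quad (0 \le h_t < 1)

\text{Constant risk: } h_t = h > 0 \implies S_T = (1 - h)^T \to 0

\text{Decaying risk: } h_t = h_0 \rho^t,\ 0 < \rho < 1 \implies
\sum_{t=1}^{\infty} h_t = \frac{h_0 \rho}{1 - \rho} < \infty \implies \lim_{T \to \infty} S_T > 0
```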

The second is for Progress to simply reject EA as too Pascalian. For the unfamiliar, I’m referring to Pascal’s Wager, the argument that believing in God is rational because the cost of doing so is low (belief) and the consequence of failing to do so (Hell) is immense. So believing in God has a high expected value (EV) even if the probability that God exists is very low. While some people see Pascalian logic as sound, and think it only “feels wrong” because of something like scope insensitivity, it’s usually seen as EV reasoning gone awry [5]. Even leaders in EA like Eliezer Yudkowsky and Holden Karnofsky tend to reject Pascalian logic, as Crawford notes in his post. My reading of this is that Yudkowsky and Karnofsky see the probabilities they are considering as far above any Pascalian range: at least a few percent, and probably in the tens of percent.

Consider a hypothetical set of policies that would cause us to grow at 1% per year. If we adopted this set of policies, we would survive the next 1000 years with a 2% probability. If we rejected them, we could grow at 2.5%, but would have only a 1% chance of surviving the next 1000 years. And if we survived the next 1000 years (the length of this hypothetical time of perils), we would create a stable galactic civilization where trillions of trillions of people would live flourishing lives for the next 10^100 years. In any EV calculation, the EV gained by growing at 2.5% instead of 1% would pale in comparison to the EV of even a 2% chance at a future too valuable to be worth describing. But in the modal outcome of both scenarios (extinction within the 1000 years), the 2.5% growth world would be much better than the 1% growth world. Cowen has described betting on outcomes with less than a 2% probability as Pascalian, so it’s unclear whether he would be willing to adopt the policy set [6], [7].
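The thought experiment can be made concrete with a toy EV calculation. Only the growth rates and survival probabilities come from the text; everything else is an assumption: value is measured in multiples of today's output, the near-term output multiple is (crudely) counted in every outcome, and `future_value` is a hypothetical stand-in for the galactic civilization.

```python
# Hypothetical policy sets from the thought experiment in the text.
SLOW = {"growth": 0.010, "p_survive": 0.02}  # adopt the safety-oriented policies
FAST = {"growth": 0.025, "p_survive": 0.01}  # reject them


def output_multiple(growth: float, years: int) -> float:
    """Size of the economy after `years` of compound growth, relative to today."""
    return (1.0 + growth) ** years


def expected_value(policy: dict, years: int, future_value: float) -> float:
    """Toy EV in units of today's output.

    Crude assumption: the near-term output multiple is realized in every
    outcome, and surviving the time of perils adds `future_value` on top.
    """
    near_term = output_multiple(policy["growth"], years)
    return near_term + policy["p_survive"] * future_value


def break_even_future_value(years: int) -> float:
    """Long-run value above which the slower, safer policy has the higher EV."""
    growth_gap = output_multiple(FAST["growth"], years) - output_multiple(SLOW["growth"], years)
    return growth_gap / (SLOW["p_survive"] - FAST["p_survive"])


years = 100
print(f"output after {years} years: slow x{output_multiple(SLOW['growth'], years):.1f}, "
      f"fast x{output_multiple(FAST['growth'], years):.1f}")
print(f"slow policy has higher EV once the future is worth more than "
      f"{break_even_future_value(years):.0f}x today's output")
```

Over a 100-year horizon the fast world ends up roughly four times richer, yet the safer policy wins on EV as soon as the long-run future is worth more than about 911 times today's output, a bar the text's galactic civilization clears by an absurd margin. Whether to act on a 2% chance at all is the separate, Pascalian question.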

But for others, 2% might still be in the realm of reasonable cost/benefit calculations. 2% is, after all, about your chance of getting into Y Combinator. There’s no agreed-upon probability at which an EV calculation becomes Pascalian, but 2% certainly seems like an upper bound. Regardless, if there is any chance of a galactic civilization at a probability high enough that you don’t feel mugged, the expected value clearly favors investing marginal resources in safety instead of growth (if not actively slowing down progress altogether).

Also, I would be surprised if most people in the Progress Community were comfortable with 700 years as the modal outcome for humanity. I think most people interested in Progress also want a Galactic Civilization. So we should be thinking about whether there’s a safer way to get there.

The question of AGI

To make this all a bit more grounded, we can consider what artificial general intelligence (AGI) scenarios would justify Progress and which would justify EA. AI is a particularly interesting example here, even relative to other x-risks, because it could be a massive driver of economic growth but it also substantially raises x-risk [8].

Define “likely” as any probability at or above the lowest probability at which you do not feel you are being Pascalian, and “not likely” as anything below it. Keep in mind that two surveys found AI experts believe the probability of AI by 2100 is between 70% and 80%. A much more conservative report from Tom Davidson at OpenPhil estimates “pr(AGI by 2100) ranges from 5% to 35%, with [a] central estimate around 20%.”

In the top left quadrant (AGI likely in the next 100 years and alignment likely), we are in an AGI situation analogous to Cowen’s world ending next year: just as we wouldn’t worry about economic growth if the world were ending next year, we need not worry about economic growth if AGI will soon bring exponential growth. If we’re in that world, the Progress enthusiast might as well kick back and relax.

On the other hand, if AGI is likely in the next 100 years and alignment is not likely, EAs have simply won the argument. We should heed Eliezer Yudkowsky and utilize every ounce of human and financial capital to avert unfriendly AGI. From my vantage, it’s clear that members of the Progress community (including myself) haven’t spent enough time deciding where they fall on this. It seems like the most fundamental practical disagreement between Progress and EA, but one that has had almost no debate.

This too seems like more a matter of mood than of substance: plenty of people want to talk about AI, so we’ll be the ones to talk about the industrial revolution. There’s also a more defensible version: that Progress doesn’t have a comparative advantage in discussing AI. But if AI is the central determinant of long-run growth, we need to be talking about it more! It might be the only important thing.

The Progress movement as it currently exists only truly makes sense in a world where AGI isn’t possible on a short timeline (see also: The Irony of “Longtermism”). I use 100 years, but really it’s any span long enough for compounding returns to start really paying off. In these worlds, we should care about making the world better for our children and grandchildren. However, even then, it doesn’t really make sense to pull marginal resources away from AI safety work. It’s also not likely, based on our best current estimates, that we are in either of these worlds.
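The four AGI scenarios can be encoded as a small decision sketch. The function names, the returned labels, and any alignment probability passed in are hypothetical; the only ingredients taken from the text are the Pascalian-threshold definition of “likely” and the AGI-by-2100 estimates quoted above.

```python
def is_likely(probability: float, pascal_threshold: float) -> bool:
    """'Likely' in the text's sense: at or above the lowest probability at
    which you no longer feel you are being Pascalian (a personal choice)."""
    return probability >= pascal_threshold


def justified_emphasis(p_agi_within_century: float, p_alignment: float,
                       pascal_threshold: float) -> str:
    """Map the 2x2 AGI scenarios to the position each one seems to justify."""
    agi = is_likely(p_agi_within_century, pascal_threshold)
    aligned = is_likely(p_alignment, pascal_threshold)
    if agi and aligned:
        return "relax: aligned AGI would deliver the growth anyway"
    if agi:
        return "EA: put marginal resources into AI safety"
    # Both no-AGI worlds: growth matters, but safety funding still shouldn't shrink.
    return "Progress: compounding growth matters, but don't pull resources from AI safety"


# AGI probabilities are from the text (Davidson's ~20%, surveys' 70-80%);
# the alignment probabilities and the 2% threshold are purely illustrative.
print(justified_emphasis(p_agi_within_century=0.20, p_alignment=0.90, pascal_threshold=0.02))
print(justified_emphasis(p_agi_within_century=0.75, p_alignment=0.01, pascal_threshold=0.02))
print(justified_emphasis(p_agi_within_century=0.01, p_alignment=0.50, pascal_threshold=0.02))
```

Note how low the AGI probability has to be before the Progress branch is reached: with any of the estimates quoted above and a threshold of a few percent, AGI counts as “likely.”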

Final thoughts

At the moment, I’m not sure there’s any true philosophical conflict between Progress and EA. I think there’s a lot of disagreement about points of emphasis, different attitudes towards precaution, and different suspicions about the nature of technological progress.

Perhaps the best argument here for Progress advocates is that this longtermist framing is wrong entirely. All the big risks are actually unknown unknowns, so safety work on current margins is going to be inevitably misplaced. What we should be doing is driving the engine of progress forward and building up institutions so that we can quickly react to x-risks when they actually begin to materialize.

This leads to the view I am currently most sympathetic to: that longtermism is philosophically true but a poor action-guiding principle. It causes one to waste so much time and pursue so many dead ends that being a longtermist isn’t even +EV. And because of that, we should focus our efforts on finding solutions to the specific problems that confront us on our current frontier. [9]

Still, on the whole, I don’t think Progress is winning any arguments against EA [10]. This is arguing on the wrong margin, though. As I said in the beginning, I think people are attracted to this argument because it looks like a Grand Intellectual War between two of our most interesting intellectual movements. But making it a war misses the point. Both groups should be much larger. Both groups share a lot of the same concerns. Both want to work on similar issues. Which raises the question: How should we be working together?

[1] Another way of getting at the left-leaning mood would be to make reference to EA’s interest in top-down control. This has been stated very plainly in terms of “steering” or Bostrom’s Singleton. I remain agnostic here as to whether those are good ideas and simply note their political feel. [11]

[2] I note that the emergence of longtermism as the central tenet of EA has changed this.

[3] To be clear, I don’t mean “mood question” in a dismissive sense. The Great Stagnation (and Industrial Revolution!) might have been caused by changes of mood, so mood might be one of the most important forces in human history! We should be invested in changing mood in ways congruent with optimal outcomes. But we also shouldn’t be over-invested in small differences in mood that are not consequentially important.

[4] I suspect we’re going to work around the question of the nature of technological development by thinking more about what the existence of black balls suggests about how we should structure scientific and political institutions. The Progress Community needs to be thinking about how we can structure institutions so people avoid black balls even if we’re not going to become EAs.

[5] Theological issues aside, obviously.

[6] A number of these positions were ascertained in conversation with Cowen and any misrepresentations are a result of my misunderstanding.

[7] Cowen seems to believe that matters of extinction are categorically separate from normal EV calculations. Cowen may also reject a question like this as being too far removed from our choice set.

[8] I’m setting aside the geopolitical dimension and “value lock-in” issues of who discovers AGI for simplicity here, but one’s opinion of those issues would change the calculation.

[9] It may be worth developing a Growth Based Prescription to X Risk. Please reach out if you’re interested in collaborating on this.

[10] The best engagement I have seen with Progress from EA is Holden Karnofsky in this post (See “rowing”).

[11] Sorry about the unlinked footnotes. Will fix.